Week 7 Update

- Tyler Price

- Feb 27, 2023

This Week

Team Goal: Determine which algorithms/approaches we want to use in the final preprocessing and classification system

Personal Goal: Implement color and fill identification

This week I focused on implementing color and fill classification algorithms. Before starting on either, though, I wanted to make shape counting (from last week) more consistent across different lighting conditions. I learned that OpenMV has a histogram equalization function, histeq(), which normalizes brightness and contrast; it seemed to increase the contrast of previously low-contrast cards (namely empty fills, especially the green ones). I had to tweak some of my thresholds from last week, but counting worked pretty consistently after this adjustment.
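For readers unfamiliar with histogram equalization, here is a minimal sketch of the textbook global version, written in plain Python on a flat list of 8-bit grayscale values. It only illustrates what `histeq()` does conceptually; OpenMV's own implementation runs in C on the board and may differ in its details. The function name `equalize` is hypothetical.

```python
def equalize(pixels, levels=256):
    """Global histogram equalization of a flat list of 8-bit grayscale values."""
    if not pixels:
        return []
    # Histogram: how many pixels have each intensity
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution function (CDF) of the histogram
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)
    # The first non-zero CDF value belongs to the darkest pixel; map it to 0
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        # Every pixel has the same value, so there is nothing to stretch
        return list(pixels)
    scale = (levels - 1) / (total - cdf_min)
    # Remap each pixel so the brightest one ends up at levels - 1
    return [round((cdf[p] - cdf_min) * scale) for p in pixels]


# A low-contrast patch squeezed into 100-120 is stretched to the full range
print(equalize([100, 100, 110, 120]))  # prints [0, 0, 128, 255]
```

Because each output level depends on the cumulative pixel count, values bunched into a narrow band (as on a low-contrast card) get spread across the full 0-255 range, which is what makes faint outlines easier to threshold.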

Another common point of failure in shape counting occurred when a lot of glare made it through the thresholding. The glare shows up as a cluster of white pixels at either the top or bottom of the card, usually causing a card with 1 shape to be counted as having 3.

To solve this, I use two more masks: one with the top third of the card blacked out, and the other with the bottom third blacked out. Whenever a card is about to be classified as having 3 shapes, I check the difference between the white-pixel counts of these two masks. Since the middle third appears in both masks, this difference effectively compares the top third of the card with the bottom third, and ideally a card has the same number of white pixels in each. When glare gets into the picture, however, this isn't the case, so when a large difference is detected, I count the card as having 1 shape instead.
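A minimal sketch of this glare check in plain Python follows, assuming the thresholded card is stored as a list of rows of 0/1 pixels (1 = white). The helper names and the `max_delta` threshold are hypothetical; a real threshold would need to be tuned empirically on the board.

```python
def white_pixels(rows):
    """Count white (1) pixels in a binary image stored as a list of rows."""
    return sum(sum(row) for row in rows)


def glare_suspected(binary, max_delta):
    """Compare the two masks; max_delta is a hypothetical, tunable threshold."""
    height = len(binary)
    third = height // 3
    # Mask A: top third blacked out (keeps the middle and bottom thirds)
    top_masked = [[0] * len(r) if y < third else r for y, r in enumerate(binary)]
    # Mask B: bottom third blacked out (keeps the top and middle thirds)
    bottom_masked = [[0] * len(r) if y >= height - third else r
                     for y, r in enumerate(binary)]
    # The rows shared by both masks cancel, leaving |bottom third - top third|
    delta = abs(white_pixels(top_masked) - white_pixels(bottom_masked))
    return delta > max_delta


def corrected_count(raw_count, binary, max_delta):
    """Only re-check cards about to be counted as 3; treat lopsided ones as 1."""
    if raw_count == 3 and glare_suspected(binary, max_delta):
        return 1
    return raw_count
```

Checking only the 3-shape case keeps the extra work off the common path and matches the failure mode described above, where glare inflates a 1-shape card to 3.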

Now, onto color detection. I took pictures of several cards with the OpenMV board and had it output the mean value of each LAB channel. The accompanying table shows a handful of the cards I analyzed.

From these values, I empirically chose thresholds for identifying a card's color. Detecting green is easy: all green cards have small A channel values, which makes sense since A in the LAB color space spans green (-128) to red (127). Any shape with an A channel value less than 2 is classified as green.

Separating purple from red proved trickier, particularly for empty and striped shapes. For most solid shapes, red has a high B channel value while purple has a low one. For empty and striped shapes, however, the B values overlap somewhat, so the L channel becomes the tiebreaker: where shapes have similar B values, the purple shapes usually have a lower L value.

I found that for B values between -12 and -4, purple shapes have an L value less than 70, while red shapes have an L value greater than 70.
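The color rules above can be written as a small decision function. This sketch assumes the per-shape mean L, A, and B values are already available; the function name is hypothetical. Two details are assumptions rather than documented rules: B values below -12 are treated as purple and above -4 as red (inferred from the low-B/high-B description), and an L value of exactly 70 is treated as red.

```python
def classify_color(l_mean, a_mean, b_mean):
    """Classify a shape's color from its mean LAB values using the thresholds above."""
    # A runs from green (negative) to red (positive), so green is easy to isolate
    if a_mean < 2:
        return "green"
    # In the overlap zone seen with empty/striped shapes, L is the tiebreaker
    # (assumption: L exactly 70 is treated as red)
    if -12 <= b_mean <= -4:
        return "purple" if l_mean < 70 else "red"
    # Assumption: outside the overlap zone, low B means purple, high B means red
    return "purple" if b_mean < -12 else "red"
```

Checking green first means the B and L thresholds only ever have to separate two classes, which keeps the red/purple rule simple.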

Fill detection ended up being trickier. Bradley originally implemented fill detection using OpenCV on the online SET dataset we'd been using for initial development. He did fill classification based on the standard deviations of the different color channels, but he told me this process performed very poorly with the actual card images from the OpenMV board, so I would need to find an alternate approach.

The approach I ended up taking was to examine a small 12x6 region in the middle of a shape on the card. For now, on cards with multiple shapes I only examine one of them, but in the future I could examine all of them to make the result more reliable. I noticed that solid shapes have a relatively low mean L value in this region, since there is no white to bring up the average, so I start by classifying shapes with a mean L value less than 60 as solid.

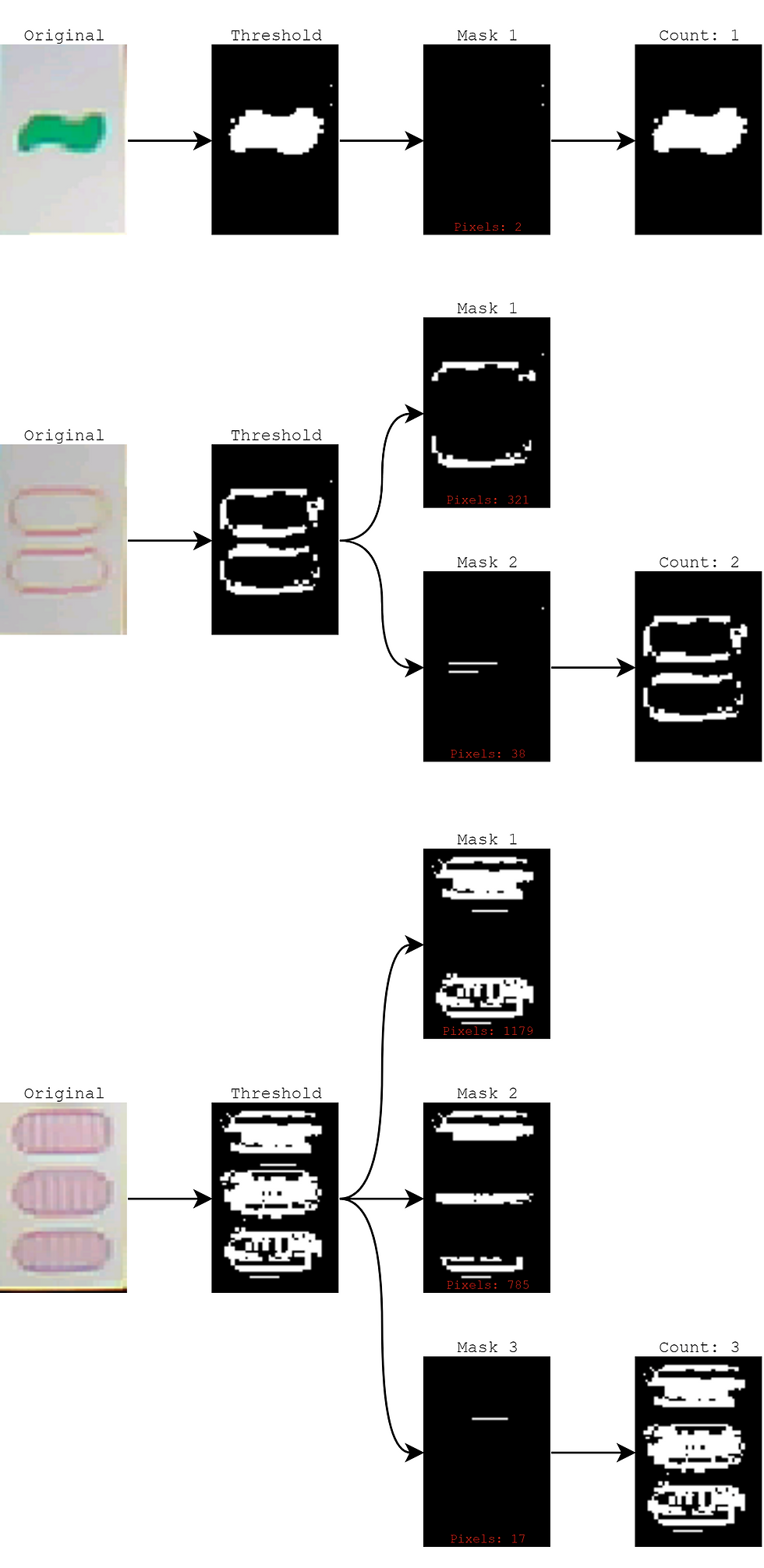

To differentiate between striped and empty shapes, I analyze the thresholded version of each card. I observed that in the centers of the thresholded cards, empty shapes have almost entirely black pixels, striped shapes have a chaotic mix of black and white, and solid shapes are entirely white.

I count the number of white pixels within the same 12x6 region in the middle of one of the shapes; if fewer than 10 pixels are white, I classify the card as empty, and otherwise as striped. This process works well enough, though the threshold values could probably be tuned further.
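Combining the two fill tests gives the sketch below. It assumes the L channel and the thresholded (0/1) image are available as lists of rows, and that the shape center `(cx, cy)` is known and far enough from the image edge for the full 12x6 region to fit. The function names are hypothetical.

```python
def center_region(img, cx, cy, w=12, h=6):
    """Return the w x h block of pixels centred on (cx, cy) from a list of rows."""
    x0, y0 = cx - w // 2, cy - h // 2
    return [row[x0:x0 + w] for row in img[y0:y0 + h]]


def classify_fill(l_img, binary_img, cx, cy):
    """l_img holds L-channel values; binary_img holds thresholded 0/1 pixels."""
    # Step 1: solid shapes have no white inside to raise the mean L value
    l_pixels = [p for row in center_region(l_img, cx, cy) for p in row]
    if sum(l_pixels) / len(l_pixels) < 60:
        return "solid"
    # Step 2: empty shapes are almost all black in the thresholded image
    white = sum(p for row in center_region(binary_img, cx, cy) for p in row)
    return "empty" if white < 10 else "striped"
```

Running the cheap mean-L test first means the white-pixel count only has to separate empty from striped, which is the harder distinction.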

Finally, I integrated Bradley's CNN shape classifier with the rest of my code to perform all four classifications (count, color, fill, and shape) at once. He had already done all of the training, so it was straightforward to take the TensorFlow Lite model he provided and get it working in OpenMV.

Putting all of the classification algorithms together, I obtained the following initial results on an arbitrary set of 12 cards:

I ran three trials, and the total column shows how many of the three trials classified the card 100% correctly (all four traits). Some initial thoughts on the results:

- Color classification appears very robust, with 100% accuracy.

- Shape and count classification seem to work quite well. Shape classification fails whenever a card with 2 shapes is miscounted as having 1 or 3, since the slice of the image passed to the CNN then won't contain the centered shape. This happens only once in the table above.

- Fill classification seems to be the least reliable of the four, struggling particularly with striped shapes. I will need to optimize it next week before moving on.

Overall, the results are promising. Aside from fill classification on certain cards, the cards are classified correctly more often than not, which suggests that the final version could take multiple trials and select the majority classification for each trait.
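A per-trait majority vote could look something like the sketch below, which picks the most frequent label for each trait across trials. The trait names and the dict-per-trial layout are assumptions for illustration. With an even number of trials, ties go to whichever label `Counter` encountered first, so an odd number of trials avoids that ambiguity.

```python
from collections import Counter

TRAITS = ("count", "color", "fill", "shape")


def majority_classification(trials):
    """trials: list of dicts mapping each trait to the label from one trial."""
    result = {}
    for trait in TRAITS:
        votes = Counter(trial[trait] for trial in trials)
        # most_common(1) returns [(label, n_votes)] for the top label
        result[trait] = votes.most_common(1)[0][0]
    return result
```

Voting per trait rather than per card means a single bad fill reading in one trial does not discard that trial's correct color, count, and shape.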

Week 7 Milestone Reflection

Our team's week 7 milestones, which have remained unchanged since week 3, are as follows:

- Algorithm for combined segmentation and classification complete and ported over to the H7

- LED matrix is built and controllable through the H7

With color, fill, and shape classification now implemented on the H7 board, the first of our team's week 7 milestones is complete! Some additional threshold tuning and optimization is still needed to get as close to 100% accuracy as possible, but the algorithms themselves have been fully ported to the OpenMV H7 board. The accuracy of the algorithms on an arbitrary set of 12 cards over 3 trials is shown in the table below:

Bradley got an Adafruit LED matrix working with the H7 board and has already developed the interface, so it is ready to display the card classification results. He discusses this in more depth in his own blog post.

In summary, our team achieved all of our week 7 milestones. We have classification algorithms for each card trait running on the H7, and an LED matrix set up to display the results. For the remainder of the quarter, we will focus on thoroughly testing and optimizing the classification steps to make them as accurate and robust as possible, as well as designing an enclosure and making the system portable using batteries.

Next Week

Team Goal: Begin working on the physical design of the end product

Personal Goal: Improve fill classification accuracy and begin CAD for device enclosure

This week I implemented the remaining classification algorithms and obtained some initial accuracy figures. Next week, I plan to optimize the fill and counting algorithms to make them more robust, and to begin designing an enclosure for the final product.

OK, looks good. Regarding the striped cards: if they are evenly striped and always, say, vertically striped, then a 2D FFT would find those stripes and show them as a strong peak in the spatial frequency domain. Perhaps taking a derivative along a path orthogonal to the direction of the stripes would further accentuate that peak in the 2D FFT.
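As a rough, one-dimensional illustration of this suggestion, the sketch below samples intensities along a single line crossing the stripes, takes a first difference as the derivative, and measures how strongly one spatial frequency dominates the discrete Fourier transform. It computes the DFT directly in O(n²) rather than with an FFT, and assumes the stripe direction is already known. The function names and the peak-to-mean score are hypothetical choices, not part of the current fill algorithm.

```python
import cmath


def dft_magnitudes(signal):
    """DFT magnitudes for bins 0..n//2, computed directly (O(n^2), not an FFT)."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(signal)))
        for k in range(n // 2 + 1)
    ]


def stripe_score(profile):
    """Peak-to-mean ratio of the non-DC spectrum of a line sampled across stripes."""
    # First difference approximates the derivative orthogonal to the stripes,
    # emphasising stripe edges and removing any constant brightness offset
    deriv = [b - a for a, b in zip(profile, profile[1:])]
    mags = dft_magnitudes(deriv)[1:]  # drop the DC (zero-frequency) bin
    if not mags:
        return 0.0  # profile too short to measure
    mean = sum(mags) / len(mags)
    if mean < 1e-9:
        return 0.0  # no meaningful non-DC energy, e.g. a flat (empty) interior
    return max(mags) / mean
```

A regular stripe pattern concentrates its energy in one frequency bin and scores high, while a flat interior has no non-DC energy and scores 0; a real version would still need a tuned cutoff between the two.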

Well done! I think it should be fairly straightforward to expand the fill algorithm to cover multiple shapes, which would hopefully increase its accuracy.