Week 2 Update

- Tyler Price

- Jan 25, 2023

This Week

Team Goal: Run first iteration of respective code assignments

Personal Goal: Isolate a single card from the background

My main goal this week was to successfully localize a single card from the background. This involves identifying the quadrilateral bounding box of each card, warping the image to correct for perspective, and resizing and cropping it into a 60x90 image containing just the card background and the pattern.

I started off by researching online how others have done playing card localization in the past and found a couple of very helpful sources: a YouTube video where someone explained how they localized regular playing cards from a dark background using OpenCV, and a blog post by someone who created a SET solver using OpenCV.

From my research I determined this type of object localization is most commonly done using the following process:

1. Convert the image to grayscale. This makes the image simpler to work with, since it has a single channel and color is irrelevant to finding the card bounding box.

2. Blur the image. This reduces noise and removes visual artifacts that would interfere with contouring in later steps. Gaussian blurring is particularly good at removing Gaussian noise from images.

3. Threshold the image. This sets each pixel to either black or white depending on whether its intensity exceeds a certain threshold value.

4. Find contours and approximate them as polygons. OpenCV's findContours() and approxPolyDP() functions are used to isolate the card boundary contours and approximate them as quadrilaterals.

5. Apply a warp transform. This warps the image so it appears as if viewed head-on instead of skewed from the camera's perspective.

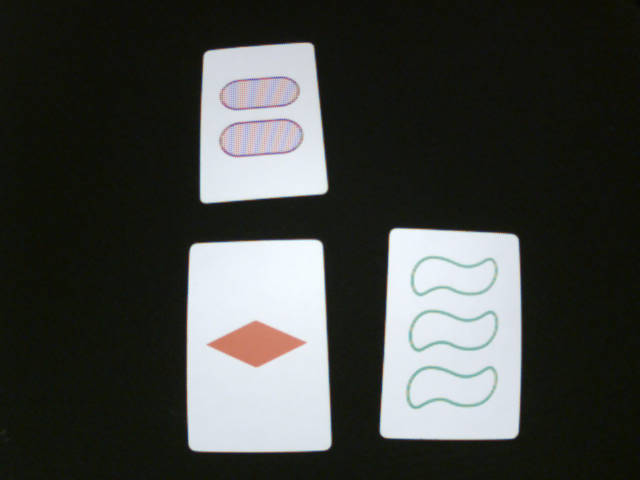

To test out this process, I took the picture below using the OpenMV board. For the remainder of this post all my work is done in a Google Colab notebook.

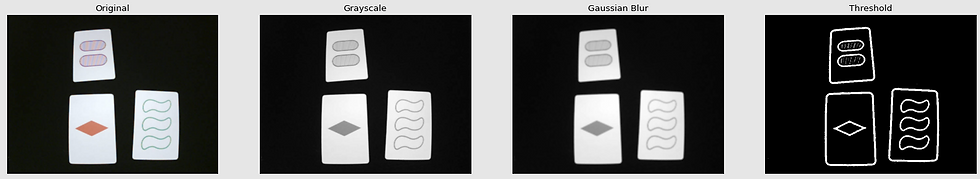

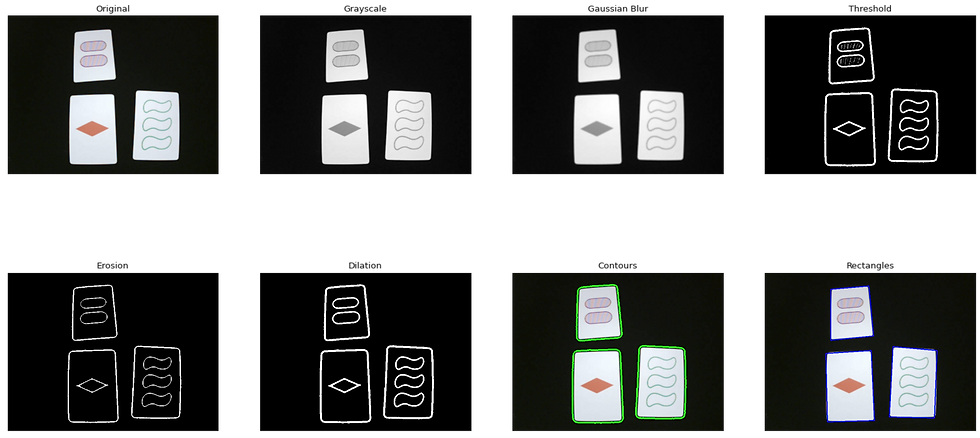

I first tried the first three steps: grayscale, blur, and threshold.

For the thresholding step I used an adaptive Gaussian threshold, which means the threshold for each pixel is the Gaussian-weighted sum of the surrounding block of pixels minus a tunable constant.

The advantage of adaptive thresholding is that, since the threshold is calculated separately for each part of the image, the results are more consistent across different lighting conditions (e.g. if there is glare or a shadow on one region of the image). I played around with the block size and constant parameters and settled on a block size of 11 and a constant of 2.

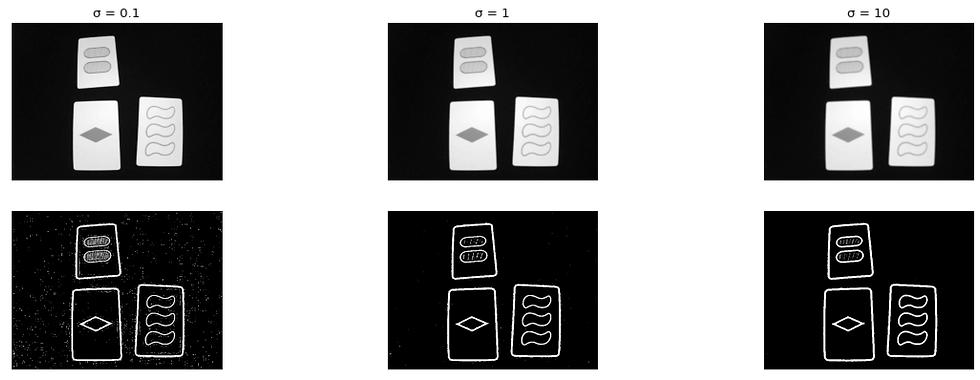

I also tuned the Gaussian blurring parameters (kernel size and sigma) in conjunction with the threshold until I obtained a result that seemed to minimize the number and size of small blotches after thresholding. I eventually settled on a kernel size of 5x5 and a sigma of 20. I noticed that a large sigma value created a very strong blur that removed all extraneous visual artifacts after thresholding.

To ensure there were absolutely no random spots in the image, I did one erosion-dilation cycle. Erosion shrinks all high-value regions (in this case white), while dilation grows them back, shrinking the low-value regions (in this case black). It works by replacing each pixel with the minimum (erosion) or maximum (dilation) value underneath the kernel. A helpful resource I found on the erosion and dilation technique was this lecture on localization from IEEE at UCLA's Pocket Racers project, starting on slide 47. I arbitrarily chose a 3x3 kernel size for the process, which yielded the result below. Note how all the lines inside the striped ovals have disappeared after erosion.

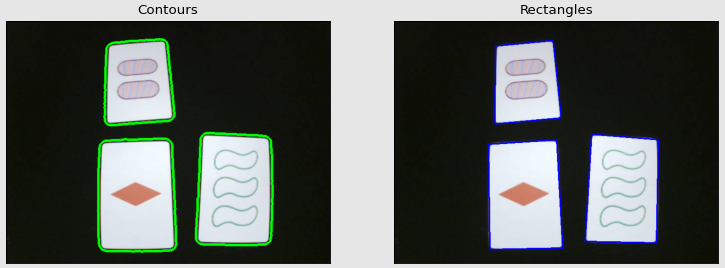
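
To make the minimum/maximum idea concrete, here is a small NumPy-only sketch of erosion and dilation with a square kernel. In practice OpenCV's `cv2.erode()` and `cv2.dilate()` do this work; the helper names and the toy 9x9 image below are made up for illustration, and the border handling (padding with white for erosion, black for dilation) is an assumption of this sketch.

```python
import numpy as np


def _window_reduce(binary, ksize, reducer, pad_value):
    """Apply `reducer` (np.min or np.max) over every ksize x ksize window."""
    pad = ksize // 2
    padded = np.pad(binary, pad, mode="constant", constant_values=pad_value)
    windows = np.lib.stride_tricks.sliding_window_view(padded, (ksize, ksize))
    return reducer(windows, axis=(2, 3))


def erode(binary, ksize=3):
    # Minimum under the kernel: white regions shrink, thin white specks vanish.
    return _window_reduce(binary, ksize, np.min, 255)


def dilate(binary, ksize=3):
    # Maximum under the kernel: white regions grow back.
    return _window_reduce(binary, ksize, np.max, 0)


# Toy image: a 5x5 white square plus one isolated white "noise" pixel.
img = np.zeros((9, 9), dtype=np.uint8)
img[2:7, 2:7] = 255
img[0, 8] = 255

# One erosion followed by one dilation.
opened = dilate(erode(img))
```

After the cycle the isolated pixel is gone, while the 5x5 square is restored to its original size. Erosion followed by dilation is commonly called morphological opening.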

With all the preprocessing done to accentuate the card boundaries, it was finally time to detect contours. First, using OpenCV's findContours() with the RETR_EXTERNAL retrieval mode, I isolated just the outer boundaries of the cards. RETR_EXTERNAL retrieves only the extreme outer contours (i.e. not those of the internal shapes), which is sufficient since all I am trying to do at this stage is identify card boundaries.

Next, using approxPolyDP(), I found the quadrilaterals that most closely match the card contours. This function finds a polygon with fewer points that approximates the contour, subject to the parameter epsilon, which is the maximum distance allowed between the original contour and its approximation. I found that a value of 0.1 times the contour's arc length yielded good results. At lower values of epsilon, additional points were included so that the resulting polygon followed the rounded corners of the card profiles.

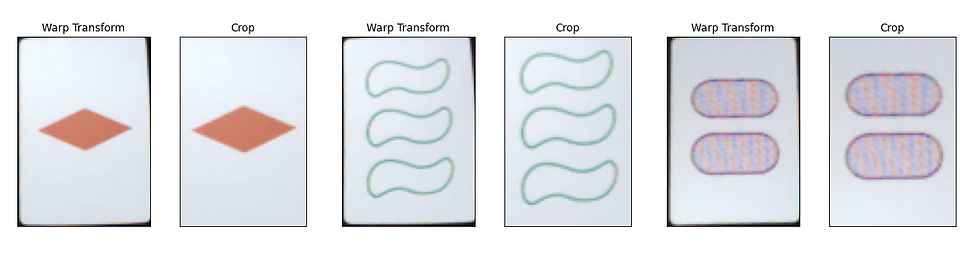

The final step is to warp and resize the images. I used OpenCV's getPerspectiveTransform() and warpPerspective() to correct the perspective skew on each quadrilateral. I noticed the rectangles still included slivers of the black background behind each card, so I had the warp transform fill a 70x100 area and then cropped 5 pixels from each side to obtain a 60x90 image of the card.

I was originally seeing inconsistent orientations for the cards, as pictured below. It turns out approxPolyDP() does not guarantee any particular ordering of the points. To fix this, I wrote a function which sorts the points by X and Y coordinates and re-orders them so that they are always clockwise starting from the top-left corner.
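
One simple way to get such an ordering is sketched here: split the points into a top pair and a bottom pair by Y, then sort each pair by X. This is an illustrative guess at the approach rather than the exact function from the notebook, and the name `order_corners` is made up. It assumes the card is not rotated close to 45 degrees, where the "top two" points become ambiguous.

```python
import numpy as np


def order_corners(points):
    """Order 4 points clockwise starting from the top-left corner.

    Accepts a (4, 2) array or the (4, 1, 2) shape returned by approxPolyDP().
    """
    pts = np.asarray(points, dtype=np.float32).reshape(4, 2)
    by_y = pts[np.argsort(pts[:, 1], kind="stable")]
    top, bottom = by_y[:2], by_y[2:]
    top_left, top_right = top[np.argsort(top[:, 0])]
    bottom_left, bottom_right = bottom[np.argsort(bottom[:, 0])]
    # Image coordinates have Y pointing down, so this order is clockwise
    # as the image is displayed.
    return np.array([top_left, top_right, bottom_right, bottom_left],
                    dtype=np.float32)


shuffled = [[110, 120], [30, 20], [25, 115], [100, 25]]
ordered = order_corners(shuffled)
```

For the shuffled input above, the result is the sequence (30, 20), (100, 25), (110, 120), (25, 115): top-left, top-right, bottom-right, bottom-left.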

Next Week

Team Goal: Work on pre-processing so we have a standardized way of reducing noise in the cards.

Personal Goal: Run card localization in realtime on the OpenMV board to determine FPS performance.

Overall, this week's progress went better than anticipated, as I was able to localize several cards at once instead of just one.

Going into next week, Bradley's and my team goal is to standardize our pre-processing steps, since many of them are redundant. For example, if I perform thresholding and blurring as part of localization but only give him the final warped portion of the original image, he may need to do blurring and thresholding again. Reducing redundant processing steps will help our system run faster.

My personal goal will be to take the code I currently have running in Google Colab and port it to the OpenMV H7 board. I am a bit wary of how many frames per second the board will be able to keep up with, since all the above processing steps need to be done each frame, for each card in the frame. In any case, that is a challenge for next week :)
