Week 3 Update

- Tyler Price

- Jan 30, 2023

This Week

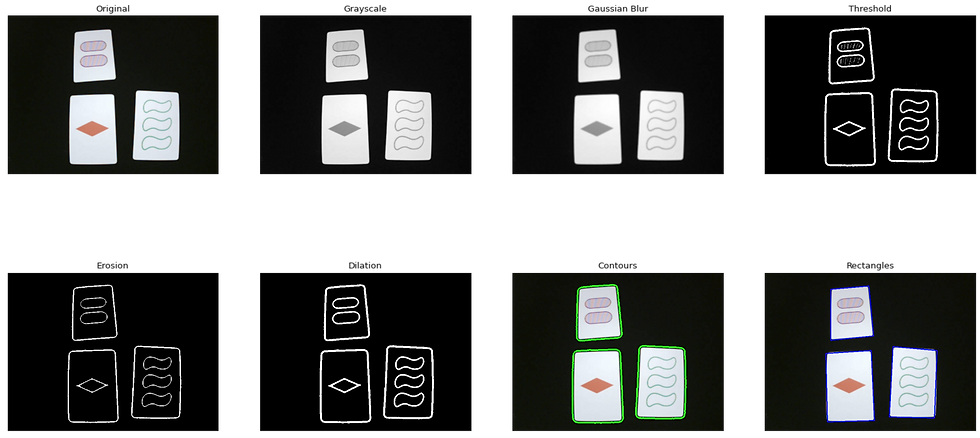

Team Goal: Work on pre-processing so we have a standardized way of reducing noise in the cards.

Personal Goal: Run card localization in real-time on the OpenMV board to determine FPS performance.

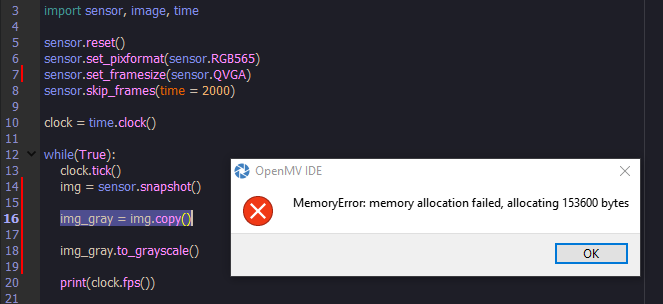

My goal for the week was to take the localization code I had written last week in Colab and get it working on the OpenMV board. I immediately ran into two roadblocks:

- OpenCV is NOT supported on the OpenMV board. Several, but not all, OpenCV functions are implemented in OpenMV's own image library, which is specifically optimized to run on microcontrollers.

- The OpenMV board does not have a lot of memory. Like, at all.

Regarding the second point, even creating a copy of the picture from the sensor would cause a crash. The sensor resolution had to be reduced to 160x120 to fix the issue. At the moment, we consider creating a copy a necessary step, since the localization preprocessing steps outlined in last week's post would destroy color and pattern information if applied to the original sensor image itself. However, I suspect the low resolution will pose its own problems, possibly reducing the accuracy of Bradley's infill detection.
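To put rough numbers on this, the sketch below computes the size of one uncompressed frame buffer. It assumes RGB565 color at 2 bytes per pixel and 8-bit grayscale at 1 byte per pixel; the 320x240 row is included only for comparison and is not necessarily the resolution we started from. Every copy of a frame costs the same amount again.

```python
def frame_bytes(width, height, bytes_per_pixel):
    """Size in bytes of one uncompressed frame buffer."""
    return width * height * bytes_per_pixel


# Assumed pixel formats: RGB565 = 2 bytes/pixel, grayscale = 1 byte/pixel.
RESOLUTIONS = {"QVGA": (320, 240), "QQVGA": (160, 120)}

for name, (w, h) in RESOLUTIONS.items():
    color = frame_bytes(w, h, 2)
    gray = frame_bytes(w, h, 1)
    # Keeping the sensor frame plus one full-size copy doubles the color figure.
    print(f"{name} {w}x{h}: RGB565 {color} B, grayscale {gray} B, "
          f"frame + copy {2 * color} B")
```

Halving both dimensions cuts every buffer by a factor of four, which is consistent with a copy fitting at 160x120 when it did not fit before.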

For now, a workaround is to create a downscaled copy of the frame for localization, and then upscale the corner coordinates for the final perspective transform.
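A minimal sketch of the upscaling step, assuming corners come back as (x, y) tuples in the downscaled copy's coordinates. The function name and the 80x60 / 160x120 sizes in the usage example are illustrative, not taken from our code.

```python
def upscale_corners(corners, small_size, full_size):
    """Map (x, y) corners found on a downscaled copy back to the full frame.

    small_size and full_size are (width, height) tuples. Precision is limited
    by the small image: one pixel of error there becomes sx (or sy) pixels in
    the full frame.
    """
    sx = full_size[0] / small_size[0]
    sy = full_size[1] / small_size[1]
    return [(round(x * sx), round(y * sy)) for x, y in corners]


# Corners located on an 80x60 copy of a 160x120 frame.
small = [(10, 5), (30, 6), (29, 40), (9, 39)]
print(upscale_corners(small, (80, 60), (160, 120)))
# [(20, 10), (60, 12), (58, 80), (18, 78)]
```

Separate x and y scale factors keep the mapping correct even if the copy does not preserve the aspect ratio exactly.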

Regarding the first point, after realizing OpenCV was not supported on the OpenMV board, I spent some time experimenting with the functions in the OpenMV image library to see if similar functionality could be achieved.

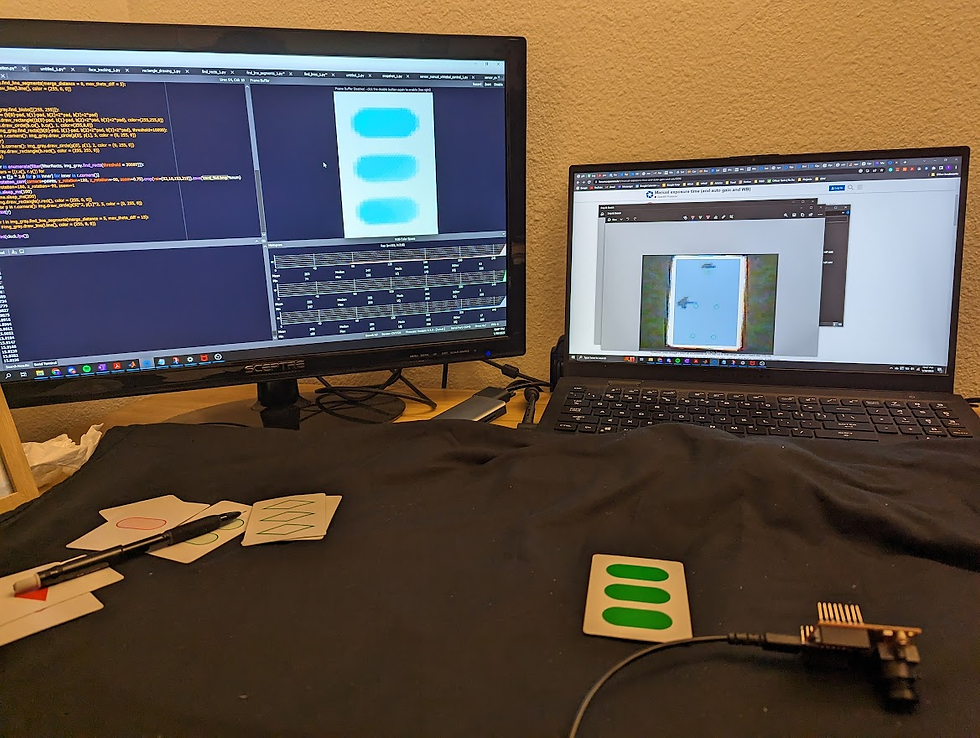

Converting to grayscale is trivial in OpenMV using the to_grayscale() function. OpenMV combines Gaussian blurring and adaptive thresholding into a single step with its gaussian() function. Instead of a sigma value, it takes multiplier and offset arguments. After some experimentation, I found that a mul value of 1 and an offset of 6 produced the thresholded image below. I was pleased to see that it looked good across different viewing angles and lighting conditions. The non-solid infill shapes were filtered out while the solid ones were not, but this doesn't affect localization of the card boundary.
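To make the combined blur-and-threshold step concrete, here is a pure-Python sketch of the idea using the OpenCV-style rule: a pixel stays white if it is brighter than its Gaussian-weighted local mean minus an offset. The 3x3 binomial kernel and clamped borders are assumptions for illustration; this is not OpenMV's implementation, whose kernel and sign conventions may differ.

```python
# 3x3 binomial approximation of a Gaussian kernel; weights sum to 16.
KERNEL = ((1, 2, 1),
          (2, 4, 2),
          (1, 2, 1))


def adaptive_threshold(img, offset):
    """Binarize a grayscale image given as a list of rows of 0-255 ints.

    A pixel becomes 1 (white) if it is brighter than its Gaussian-weighted
    3x3 neighbourhood mean minus `offset`, else 0 (black). Pixels on the
    border reuse the nearest in-bounds neighbour (clamped edges).
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += KERNEL[ky][kx] * img[yy][xx]
            local_mean = acc / 16
            out[y][x] = 1 if img[y][x] > local_mean - offset else 0
    return out


# A dark pixel (e.g. part of a card outline) on a bright card face.
patch = [[200, 200, 200],
         [200, 0, 200],
         [200, 200, 200]]
print(adaptive_threshold(patch, 6))  # [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
```

Because the cutoff follows the local mean, a uniformly shaded region stays white whatever its overall brightness, which is why this kind of thresholding copes with lighting changes better than a single global cutoff.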

OpenMV offers find_blobs() and find_rects() functions. The find_rects() function appears to work well up close to a card, accurately locating the corner positions. However, it is inconsistent across frames when the entire playing area is in view:

The find_blobs() function has the reverse problem: it is very consistent across frames, accurately identifying all 12 cards at once. However, blob.corners() gave very unreliable and inaccurate results, sometimes placing corners on top of each other or along the sides of the card:

The flickering of find_rects() is not a serious problem, as long as there is a reliable way to tell where each card is. On the first frame in which find_rects() finds a particular card, that card can be classified and recorded along with its position. Even if it is not found in subsequent frames, the program assumes the card is still in that position until it sees a different card in the same spot, at which point it overwrites the old classification.
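The bookkeeping described above could look something like the following sketch. The class name, the 12-slot list, and the string labels are hypothetical; the only rule it encodes is that a slot keeps its last classification until a different card is seen there.

```python
class CardMemory:
    """Remembers the most recent classification seen in each grid slot."""

    def __init__(self, n_slots=12):
        # None means no card has been classified in that slot yet.
        self.slots = [None] * n_slots

    def observe(self, slot, label):
        """Record a classification for a slot.

        Returns True if the slot's contents changed (new or different card),
        False if the same card was seen again. Frames in which find_rects()
        misses a card simply never call this, so the old entry persists.
        """
        if self.slots[slot] != label:
            self.slots[slot] = label
            return True
        return False


memory = CardMemory()
memory.observe(4, "two red solid ovals")      # first sighting -> True
memory.observe(4, "two red solid ovals")      # same card again -> False
memory.observe(4, "one green empty diamond")  # card replaced -> True
print(memory.slots[4])
```

Returning whether anything changed lets the caller rerun the (more expensive) search for SETs only when the board actually changes.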

To reliably determine each card's position within the 3x4 grid, find_blobs() can be used to find the center point of each card blob. Then, when find_rects() finds a card, its position is simply whichever of the 12 center points it overlaps. In the video below, a + is drawn at the center of each card and stays stable between frames, while the corners of the detected rectangles flicker.
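A sketch of the slot lookup, assuming each blob center is an (x, y) tuple (for example built from blob.cx() and blob.cy()) and each find_rects() result has been reduced to a list of four corner tuples. The helper names are hypothetical. It uses a cross-product test, so slightly rotated rectangles are handled rather than just checking a bounding box.

```python
def point_in_quad(pt, corners):
    """True if pt lies inside (or on an edge of) the convex quadrilateral
    whose 4 corners are given in clockwise or counter-clockwise order."""
    px, py = pt
    crosses = []
    for i in range(4):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % 4]
        # Sign tells which side of the edge (x1,y1)->(x2,y2) the point is on.
        crosses.append((x2 - x1) * (py - y1) - (y2 - y1) * (px - x1))
    return all(c >= 0 for c in crosses) or all(c <= 0 for c in crosses)


def grid_slot(corners, centers):
    """Index of the first blob center inside the rectangle, or None."""
    for i, center in enumerate(centers):
        if point_in_quad(center, corners):
            return i
    return None


centers = [(20, 20), (60, 20), (100, 20)]  # stable blob centers
quad = [(45, 5), (78, 8), (75, 38), (42, 35)]  # one flickering find_rects() hit
print(grid_slot(quad, centers))  # 1
```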

The last step was to perform perspective correction on the cards. In this blog post, I focus on extracting a single card, since the warping functions modify the original image in place. This means warping multiple cards will require investigating reading from and writing to an SD card, as there is not enough RAM to hold a copy of the original image.
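For reference, four-corner perspective correction comes down to a 3x3 homography with eight unknowns, fixed by the four corner correspondences (two equations each). The pure-Python sketch below solves that 8x8 linear system; it yields the same matrix OpenCV's getPerspectiveTransform() produces. I have not checked how rotation_corr() computes its transform internally, and the function names here are my own. The 60x90 target matches the card size used in this post.

```python
def solve(a, b):
    """Solve the linear system a @ x = b (a is n x n) by Gaussian
    elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src, dst):
    """3x3 perspective matrix (bottom-right entry fixed to 1) that maps
    four (x, y) src points onto four (u, v) dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = solve(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def warp_point(H, pt):
    """Map one (x, y) point through H, including the perspective divide."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Hypothetical card corners (TL, TR, BR, BL) mapped onto a 60x90 output.
corners = [(12, 8), (70, 15), (66, 110), (5, 98)]
target = [(0, 0), (59, 0), (59, 89), (0, 89)]
H = homography(corners, target)
for p in corners:
    print(p, "->", warp_point(H, p))  # each lands on its target, up to float error
```

To actually fill a 60x90 output, one would compute homography(target, corners) instead and, for each output pixel, sample the source frame at the mapped location; mapping backwards avoids holes in the output.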

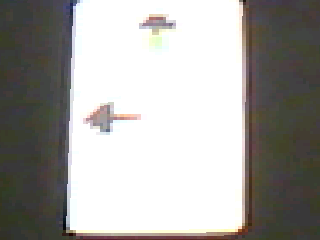

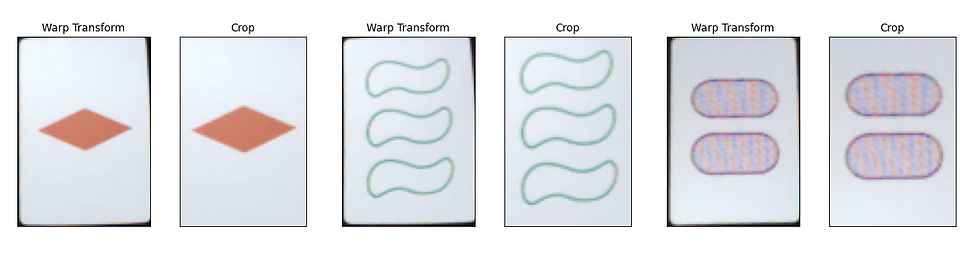

OpenMV's rotation_corr() function performs the warping when passed the corner points of a card's rectangle. I also use the crop() function to trim the slivers of background around the card edges and to set the image size to 60x90. With this, I was able to isolate the card as shown below:

Originally the extracted image came out rotated and flipped, so I made a "calibration" SET card by cutting an index card to the same size as a SET card and drawing arrows on its top and left sides.

Original orientation:

Fixed orientation:
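One common way to make the orientation deterministic (not necessarily exactly how it was fixed here) is to sort the four corners into a fixed order before warping, so that the card's top-left corner always maps to the output's top-left. A sketch, assuming (x, y) tuples in image coordinates where y increases downward; the helper name is hypothetical.

```python
def order_corners(corners):
    """Return 4 (x, y) corners as [top-left, top-right, bottom-right,
    bottom-left], in image coordinates where y grows downward."""
    tl = min(corners, key=lambda p: p[0] + p[1])  # smallest x + y
    br = max(corners, key=lambda p: p[0] + p[1])  # largest x + y
    tr = max(corners, key=lambda p: p[0] - p[1])  # far right, near top
    bl = min(corners, key=lambda p: p[0] - p[1])  # far left, near bottom
    return [tl, tr, br, bl]


print(order_corners([(66, 110), (12, 8), (5, 98), (70, 15)]))
# [(12, 8), (70, 15), (66, 110), (5, 98)]
```

The sum/difference trick breaks down when a card is rotated close to 45 degrees, where two corners tie; for cards lying roughly upright in a grid, that should be rare.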

The below table summarizes the OpenMV "equivalents" of the OpenCV functions used in last week's Google Colab notebook:

| Function | OpenCV | OpenMV |
|---|---|---|
| Convert to grayscale | cvtColor | to_grayscale |
| Gaussian Blur | GaussianBlur | gaussian |
| Adaptive Threshold | adaptiveThreshold | gaussian |
| Find card edge contour | findContours | find_rects |
| Find corner points | approxPolyDP | find_rects |
| Perspective Transform | warpPerspective | rotation_corr |

Week 3 Milestone Reflection

At the start of this project, my partner Bradley and I set the following milestone goals for week 3:

- Have a working algorithm that can classify an image of a card

- Be able to successfully read data from the camera onto the H7

I took responsibility for the second goal, and Bradley for the first. By the end of week 3, we had completed both goals! Bradley has developed a classification algorithm for each card attribute, using test images from a public GitHub repository containing training images of each card, which he resized to 60x90 (read more about his classification algorithm on his blog!).

Reading data from the camera onto the H7 turned out to be trivial, thanks to the comprehensive libraries and MicroPython environment provided for the OpenMV board.

Once we realized this, we revised our task assignments to put me in charge of card localization on the OpenMV H7 board. Last week, I got an algorithm working in a Google Colab notebook that takes an image saved from the OpenMV board and localizes each card:

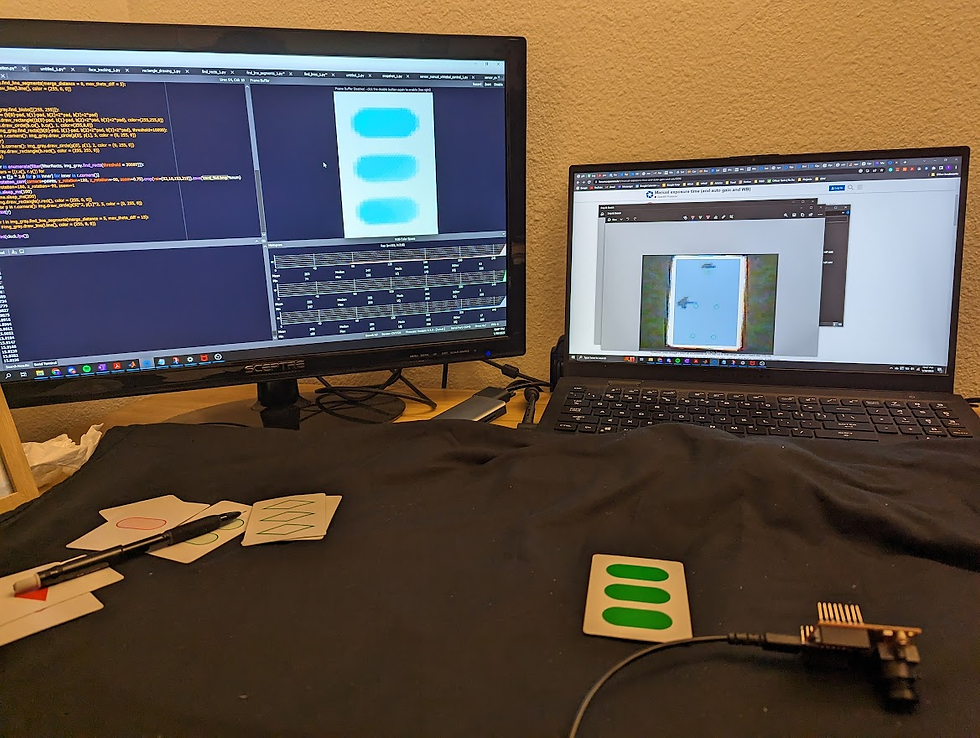

This week, I was able to get the algorithm working on the OpenMV board, although thus far it is only able to localize one card at a time due to memory constraints:

Our original week 7 milestones were as follows:

- Algorithm for combined segmentation and classification completed and ported over to H7

- LED matrix is built and controllable through the H7

Given our progress so far, we are confident that we can achieve our week 7 milestones on time. I will be responsible for combining Bradley's classification code with my localization code on the OpenMV board and for implementing the game logic, while he builds an LED matrix to show the user where the SETs are.
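For the game logic, the SET rule itself is compact: three cards form a SET when each of the four attributes (number, color, shape, shading) is either all the same or all different across the three cards. A sketch, assuming each classified card is encoded as a tuple of four values in {0, 1, 2}; that encoding is an assumption, not the format of Bradley's classifier output. With 12 cards there are only C(12, 3) = 220 triples, so brute force is cheap.

```python
def is_set(a, b, c):
    """Three cards form a SET if, for every attribute, the values are all
    the same or all different. With values encoded 0, 1, 2 this holds
    exactly when each attribute's three values sum to a multiple of 3."""
    return all((x + y + z) % 3 == 0 for x, y, z in zip(a, b, c))


def find_sets(cards):
    """Return index triples (i, j, k), i < j < k, of every SET among cards.
    Plain nested loops, so no itertools is needed on the board."""
    n = len(cards)
    found = []
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(j + 1, n):
                if is_set(cards[i], cards[j], cards[k]):
                    found.append((i, j, k))
    return found


# (number, color, shape, shading) for four hypothetical cards.
cards = [(0, 0, 0, 0), (1, 1, 1, 1), (2, 2, 2, 2), (0, 0, 0, 1)]
print(find_sets(cards))  # [(0, 1, 2)]
```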

Next Week

Team Goal: Get the combined classification system functioning on the H7

Personal Goal: Enable localization of multiple cards by using the SD card to store images, and implement Bradley's classification code on the OpenMV board using OpenMV image library functions

My first goal for next week will be getting localization working with more than one card at a time, which will likely require investigating reading and writing images to the SD card. Once this is done, I will be ready to take Bradley's code and port it into the OpenMV environment.
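One way to work around the RAM limit is to stage a frame on the SD card and read back only the rows that cover one card. The sketch below does this with plain file I/O and a simple binary PGM layout; the file format, function names, and path are assumptions for illustration, not something we have tested on the board yet.

```python
def save_pgm(path, width, height, pixels):
    """Write 8-bit grayscale pixels (row-major bytes) as a binary PGM (P5)."""
    if len(pixels) != width * height:
        raise ValueError("pixel count does not match width * height")
    with open(path, "wb") as f:
        f.write(("P5\n%d %d\n255\n" % (width, height)).encode())
        f.write(pixels)


def read_region(path, x, y, w, h):
    """Read a w x h window of a PGM written by save_pgm, one row at a time,
    so only the window (not the whole frame) is ever held in RAM."""
    with open(path, "rb") as f:
        if f.readline().strip() != b"P5":
            raise ValueError("not a binary PGM")
        width, height = (int(v) for v in f.readline().split())
        f.readline()  # max-value line; save_pgm always writes 255
        if x < 0 or y < 0 or x + w > width or y + h > height:
            raise ValueError("window falls outside the frame")
        start = f.tell()  # offset of the first pixel byte
        rows = []
        for r in range(h):
            f.seek(start + (y + r) * width + x)
            rows.append(f.read(w))
    return rows


# A 4x3 test frame whose pixel values are just 0..11.
save_pgm("frame.pgm", 4, 3, bytes(range(12)))
print([list(row) for row in read_region("frame.pgm", 1, 1, 2, 2)])  # [[5, 6], [9, 10]]
```

Seeking row by row keeps peak memory to roughly one card's window plus the file buffer, at the cost of many small reads, which will be slower on an SD card than a single bulk read.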

Hmm ... the OpenMV camera board has an H7 processor on it. The H7 has more memory than 153KB. Is it all taken up with stuff that supports the camera? On the bottom is a slot for a microSD card. It can hold a file system. Maybe store stuff there?

Local filesystem and SD card

There is a small internal filesystem (a drive) on the openmvcam which is stored within the microcontroller’s flash memory.

When the openmvcam boots up, it needs to choose a filesystem to boot from. If there is no SD card, then it uses the internal filesystem as the boot filesystem, otherwise, it uses the SD card. After the boot, the current directory is set to…